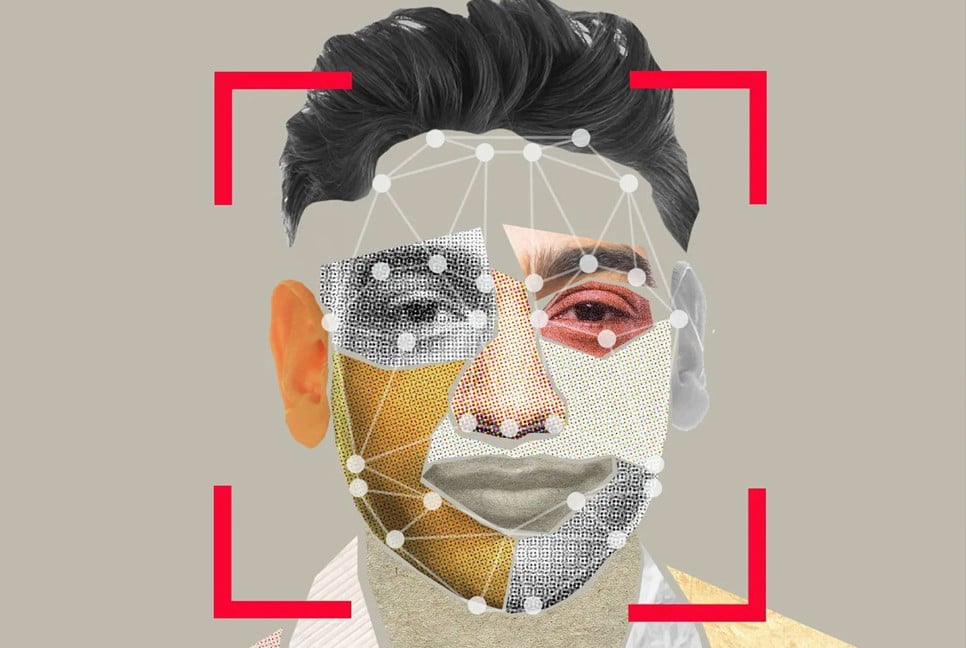

In October, Meta began testing facial recognition tools internationally, aiming to prevent scams using celebrities' images and help users recover compromised Facebook or Instagram accounts. Now, this test is expanding to an additional prominent country.

After initially excluding the United Kingdom from its facial recognition test, Meta launched both tools there on Wednesday. Additionally, in countries where the tools were already available, the "celeb bait" protection is now being expanded to more users, according to the company.

Meta said it got the green light in the U.K. “after engaging with regulators” in the country, which itself has doubled down on embracing AI. No word yet on Europe, the other key region where Meta has yet to launch the facial recognition tool ‘test’.

“In the coming weeks, public figures in the U.K. will start seeing in-app notifications letting them know they can now opt-in to receive the celeb-bait protection with facial recognition technology,” a statement from the company said. Both this and the new “video selfie verification” that all users will be able to use will be optional tools, Meta said.

Meta has a long history of tapping user data to train its algorithms, but when it first rolled out the two new facial recognition tests in October 2024, the company said the features were not being used for anything other than the purposes described: fighting scam ads and user verification.

“We immediately delete any facial data generated from ads for this one-time comparison regardless of whether our system finds a match, and we don’t use it for any other purpose,” wrote Monika Bickert, Meta’s VP of content policy, in a blog post (which has since been updated with the detail about the U.K. expansion).

The developments, however, come at a time when Meta is going all-in on AI in its business.

In addition to building its own large language models and using AI across its products, Meta is also reportedly working on a standalone AI app. It has also stepped up lobbying efforts around the technology, and given its two cents on what it deems to be risky AI applications, such as those that can be weaponized (the implication being that what Meta builds is not risky, never!).

Given Meta’s track record, a move to build tools that fix immediate issues on its apps is probably the best approach to gaining acceptance of any new facial recognition features. And this test fits that bill: as we’ve said before, Meta has been accused for many years of failing to stop scammers from misappropriating famous people’s faces and using its ad platform to push scams, like dubious crypto investments, on unsuspecting users.

Bd-pratidin English/ Afia