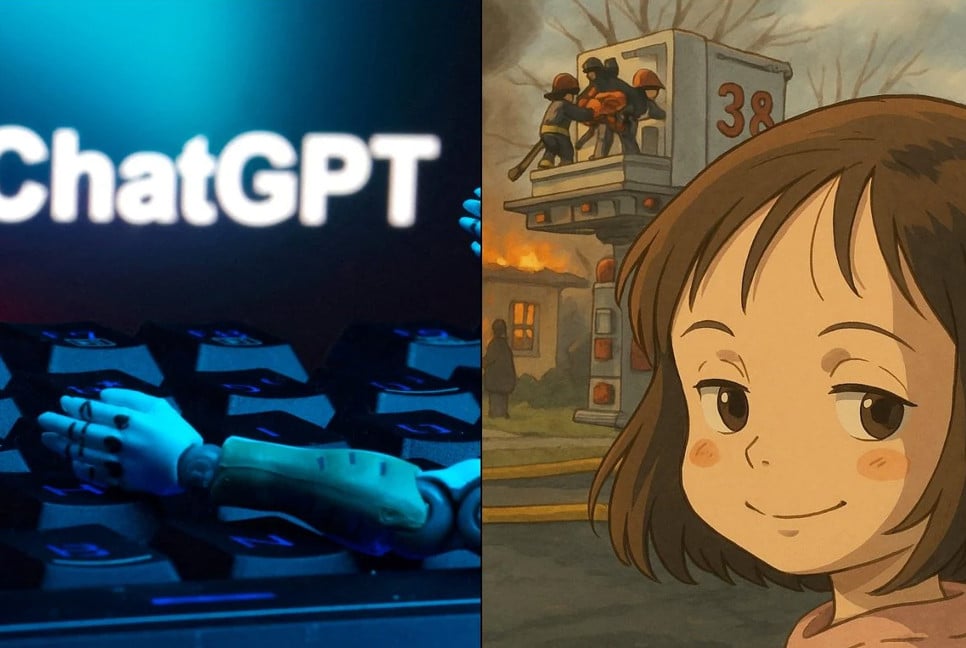

ChatGPT, OpenAI’s AI-powered chatbot, may not be as energy-intensive as previously assumed. According to a new study by Epoch AI, the energy consumed per ChatGPT query is significantly lower than earlier estimates suggested, though the chatbot’s overall energy demands are still expected to grow over time.

A widely cited statistic suggested that ChatGPT requires around 3 watt-hours of energy per query, 10 times as much as a Google search. However, Epoch AI’s analysis, which uses OpenAI’s latest GPT-4o model as a reference, estimates that the average query actually consumes only about 0.3 watt-hours, less than many household appliances use.

“The energy use is really not a big deal compared to common appliances, heating or cooling a home, or driving a car,” said Joshua You, a data analyst at Epoch who conducted the study. He noted that previous estimates were based on outdated research that assumed OpenAI used older, less efficient chips to power its models.

Despite this lower-than-expected consumption, AI’s overall energy impact remains a contentious issue. Last week, over 100 organisations signed an open letter urging AI companies and regulators to prevent AI data centres from depleting natural resources and increasing reliance on nonrenewable energy.

Epoch’s estimate of 0.3 watt-hours per query is still an approximation, as OpenAI has not disclosed precise data on its energy usage. The study also did not account for the additional energy used by features such as image generation or the processing of long inputs, both of which likely consume more electricity.

While ChatGPT’s current energy footprint is lower than feared, its energy consumption is expected to rise. More advanced AI models require extensive training, which demands significant computing power. Additionally, newer reasoning models, designed to work through complex tasks over longer time frames, will likely increase AI’s energy needs.

According to a RAND report, AI data centres may soon require nearly all of California’s 2022 power capacity (68 GW). By 2030, training a single frontier AI model could demand as much power as eight nuclear reactors produce (8 GW). OpenAI, along with investment partners, plans to spend billions on new AI data centres to support the industry’s rapid expansion.

While OpenAI has developed more energy-efficient models like o3-mini, it remains uncertain whether these advancements will counterbalance AI’s increasing energy demands. For those concerned about their AI-related energy footprint, You suggests using ChatGPT less frequently or opting for smaller, less power-intensive models like GPT-4o-mini.

Source: TechCrunch

Bd-pratidin English/Fariha Nowshin Chinika