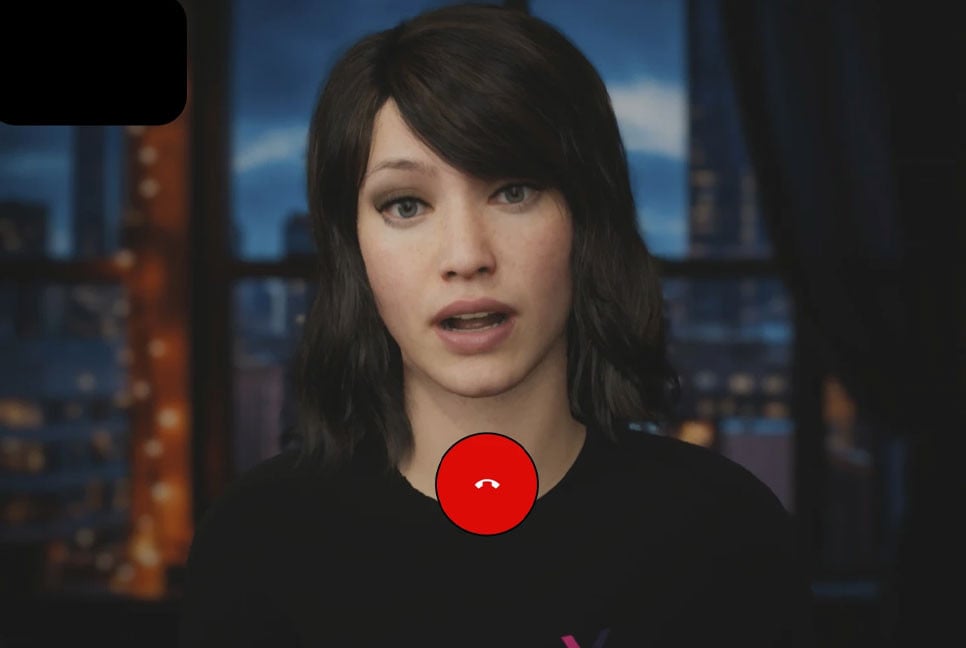

The next time you schedule a medical check-up, you might receive a call from someone like Ana—a reassuring voice helping you prepare for your appointment and answering urgent queries.

With a soothing, friendly manner, Ana is designed to put patients at ease, much like many nurses across the U.S. However, unlike them, she is available around the clock and can communicate in multiple languages, from Hindi to Haitian Creole.

That’s because Ana isn’t a person but an AI-powered program developed by Hippocratic AI, one of several emerging companies focused on automating time-consuming tasks traditionally handled by nurses and medical assistants.

Ana is among the most visible signs of AI’s entry into healthcare, where hundreds of hospitals now use increasingly sophisticated computer systems to monitor patients’ vital signs, flag emergencies, and trigger step-by-step care plans: responsibilities once handled solely by nurses and other health professionals.

Hospitals argue that AI improves efficiency and helps address burnout and staff shortages. However, nursing unions warn that this technology, which is not fully understood, undermines nurses’ expertise and compromises patient care.

“Hospitals have long been waiting for a tool that seems credible enough to replace nurses,” said Michelle Mahon of National Nurses United. “The entire system is being shaped to automate, deskill, and eventually replace caregivers.”

National Nurses United, the largest nursing union in the U.S., has led more than 20 protests at hospitals nationwide, demanding a say in how AI is implemented and protection from disciplinary action when nurses override automated recommendations. Concerns escalated in January when Robert F. Kennedy Jr., the incoming health secretary, suggested that AI nurses “as good as any doctor” could be deployed in rural areas. On Friday, Dr. Mehmet Oz, who has been nominated to oversee Medicare and Medicaid, expressed confidence that AI could “free doctors and nurses from excessive paperwork.”

Hippocratic AI initially advertised its AI assistants at $9 per hour—far below the $40 per hour typically paid to registered nurses. The company has since removed this pricing from its promotional materials, focusing instead on demonstrating its capabilities and assuring customers of rigorous testing. The company declined interview requests.

AI in hospitals can trigger false alarms and risky advice

For years, hospitals have tested various technologies to improve patient care and reduce costs, including sensors, microphones, and motion-detecting cameras. Now the data these devices collect is being linked to electronic medical records and analyzed to predict medical problems and direct nurses’ care, sometimes before a nurse has even examined the patient.

Adam Hart, an emergency room nurse at Dignity Health in Henderson, Nevada, encountered this firsthand when his hospital’s AI system flagged a new patient as possibly having sepsis, a life-threatening complication of infection. Protocol required him to administer a large dose of IV fluids immediately. On closer examination, however, Hart realized the patient was on dialysis, meaning a large volume of fluid could overload their kidneys.

When he raised the issue with the supervising nurse, he was told to follow protocol. Only after a nearby doctor intervened did the patient receive a slow IV fluid infusion instead.

“Nurses are being paid to think critically,” Hart said. “Handing over our decision-making to these systems is reckless and dangerous.”

While nurses acknowledge AI’s potential to assist with patient monitoring and emergency response, they argue that in practice it often produces an overwhelming number of false alerts. Some systems even trigger alerts over basic bodily functions, such as a patient having a bowel movement.

“You’re trying to concentrate on your work, but you keep getting all these notifications that may or may not be significant,” said Melissa Beebe, a cancer nurse at UC Davis Medical Center in Sacramento. “It’s difficult to determine which alerts are accurate because there are so many false ones.”

Can AI be beneficial in hospitals?

Even the most advanced AI systems overlook subtle signs that nurses instinctively notice, such as changes in facial expressions or unusual odors, noted Michelle Collins, dean of Loyola University’s College of Nursing. However, human nurses are not infallible either.

“It would be unwise to dismiss AI entirely,” Collins stated. “We should leverage its capabilities to enhance care, but we must also ensure it does not replace the human touch.”

An estimated 100,000 nurses left the workforce during the COVID-19 pandemic—the largest decline in staffing in four decades. With an aging population and more nurses retiring, the U.S. government projects over 190,000 nursing vacancies annually through 2032.

Given these challenges, hospital administrators see AI as a crucial support system—not to replace human care but to assist nurses and doctors in gathering information and communicating with patients.

‘Sometimes patients are speaking to a human, sometimes they’re not’

At the University of Arkansas for Medical Sciences in Little Rock, staff make hundreds of calls each week to prepare patients for surgery. Nurses confirm information about prescriptions, heart conditions, and other issues, such as sleep apnea, that must be reviewed before anesthesia.

The challenge is that many patients are only available in the evenings, often during dinner or their children’s bedtime.

“So, we need to contact several hundred patients within a two-hour window—but I don’t want to pay my staff overtime to do that,” explained Dr. Joseph Sanford, the hospital’s health IT director.

Since January, the hospital has been using an AI assistant from Qventus to handle calls, exchange medical records, and summarize information for human staff. Qventus reports that 115 hospitals currently use its technology to improve efficiency, reduce cancellations, and alleviate staff burnout.

Each call begins with a disclaimer identifying the program as an AI assistant.

“We prioritize full transparency so that patients always know whether they are speaking to a person or an AI,” Sanford said.

While Qventus focuses on administrative tasks, other AI developers are aiming for a broader role in healthcare.

Israeli startup Xoltar has created lifelike avatars capable of conducting video calls with patients. In collaboration with the Mayo Clinic, the company is developing an AI assistant to teach cognitive techniques for managing chronic pain. Additionally, Xoltar is working on an AI avatar designed to assist smokers in quitting. Early trials show patients spending an average of 14 minutes interacting with the program, which can detect facial expressions, body language, and other cues.

Nursing experts studying AI believe these tools might work well for relatively healthy people who actively manage their own health. However, most patients in the healthcare system do not fit that description.

“It’s the seriously ill who account for most healthcare needs in the U.S., and we need to carefully assess whether chatbots are truly suited for those cases,” said Roschelle Fritz of the University of California Davis School of Nursing.

Source: AP

Bd-pratidin English/ Jisan